(This is the inevitable meta-post, the blog post that talks about setting up the blog.)

For the last couple of years I’ve been obsessed with the idea of static websites, websites where all of the compute is done up-front when the site is published. Most websites really don’t need heavy PHP frameworks or slow-starting .NET applications running in response to every single request. If a website doesn’t contain dynamic user-data, and if it’s not updated every few minutes, then it just seems inefficient to construct each page individually for each visitor.

I’ve been longing to build my own static websites, and I even made a start on building a .NET Core app to generate a blog site for me. But then I found Gatsby and instantly fell in love. Even just looking at the homepage of the Gatsby website (and how fast it loaded) I knew I’d found the CMF for me.

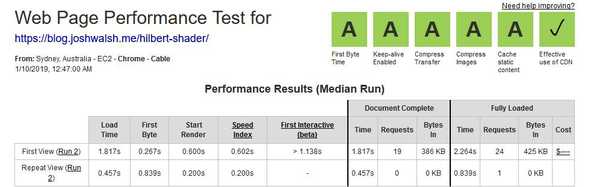

There are plenty of articles online about hosting Gatsby using AWS S3+CloudFront, and there are even a couple of articles about using CodeBuild/CodePipeline for CI/CD (Continuous Integration & Continuous Delivery). But I believe they all over-simplify things, and miss out some real opportunities for improvement. While setting up this blog site my perfectionism kicked in, and I think that I can give you some tips on how to make your website just a little bit better. Plus we’re going to make /r/frugal proud and do it on a shoestring budget. If you already own a domain name, you can run a high-performance website for a few bucks per month.

If you’re making a blog, it’s easiest if you have at least one article pre-prepared. If you try to run a blog with no articles, Gatsby will encounter errors. This is why this blog post about making the blog is not the first post on the blog. I had pre-prepared the Hilbert Shader article.

Objective

The objective of this post is to give you some pointers to help you host a Gatsby website on AWS. If you follow this post, you will end up with two environments, a production environment and a password-protected preview/staging environment. The preview environment will be automatically updated whenever changes are pushed to a branch within a Git repository, and the production environment will be updated after a manual approval step is completed.

We’ll use AWS CodePipeline and CodeBuild for the CI/CD. The site will be hosted statically using S3 and CloudFront.

Normally the golden rule is to make your staging and production environments as close to identical as possible, but in this case there will be some differences because it’s not possible to restrict access to S3 Static Website Hosting. The preview environment therefore will not support server-side redirects, may be slightly slower than production, and will cost slightly more per request. (Don’t worry though, it’ll still be very cheap!)

This is not intended to be a step-by-step guide. I’m assuming you have at least some familiarity with both AWS and JavaScript. If you want a guide that holds your hand a bit more, check out this helpful article.

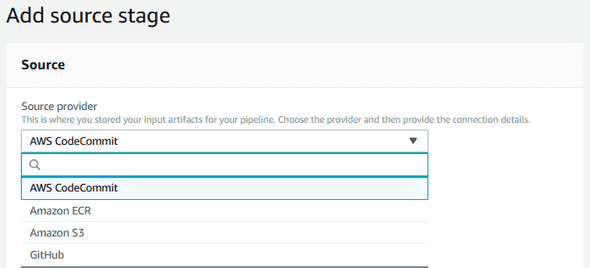

Git repository

Make sure you use a Git provider that’s well supported by CodePipeline. You can find a list of these by creating a pipeline in CodePipeline. On the second page of the wizard it will ask you to choose a source provider. Use one of the providers from this dropdown; it makes things a lot easier.

If you search, you’ll find announcements from Amazon about Bitbucket being supported by CodePipeline. Don’t believe them! As of the time of writing, Bitbucket is technically supported, but really not as well as the other providers. Save yourself some hassle and choose one of the providers listed in the dropdown for a CodePipeline source.

Certificates

When setting up a website, HTTPS certificates are probably something you do near the end, not right at the beginning. But they can sometimes take a little while to be approved and I hate waiting, so I apply for them at the beginning. It’s comforting to know that the certificates will be ready for me by the time I need them.

Use AWS Certificate Manager to apply for the certificates. It’s free, which is pretty sweet. I’d recommend requesting two certificates, one for production and one for a test/preview environment. I’d also recommend adding two domains for each, one with www. and one without. E.g. blog.joshwalsh.me & www.blog.joshwalsh.me for production, and preview.blog.joshwalsh.me & www.preview.blog.joshwalsh.me for preview.

Getting started with Gatsby

The best way to get started with Gatsby is using a Starter. You can find starters for all different purposes here. I used this one because I was setting up a blog.

Plugin recommendations are beyond the scope of this article, but maybe in future I’ll make another post about some of my favourites.

Testing your site

Once you’ve got the basics set up, you’ll probably want to test your site. You can do this by running gatsby develop. This will build your site and run a server so that you can view it. Additionally, it will watch for any changes you make to files and automatically apply those changes, without you even having to refresh the page.

Be aware that if you install/remove plugins or change the config file you will have to restart gatsby develop for the changes to take effect. But most JavaScript, CSS or content changes will take effect immediately.

gatsby develop is a fantastic tool, but because it runs the site in development mode it occasionally might not represent the same results as you’ll get once you build your website for production.

If you’ve developed websites before, chances are you have a web server like nginx or Apache already installed on your computer. Configure a virtualhost (nginx, Apache) on your web server to point to the ‘public’ directory within your Gatsby project. I use an address ending in .local, e.g. blog.joshwalsh.local. Be aware that .local is reserved for multicast DNS (RFC 6762), so if name resolution misbehaves on your machine, the reserved .test TLD is a safe alternative.

If you don’t already have a server, Gatsby comes with one. Run gatsby serve --host blog.joshwalsh.local --port 80 and you’ll have it running.

Once your server is configured, you need to modify your hosts file. Add an entry so that whatever local address you specified earlier points to 127.0.0.1.

Finally, you can run gatsby build and then open the local address in a web browser to view the results. Hopefully they look the same as what you saw from gatsby develop, but now you have the confidence to know exactly how the live site will look.

S3

Each environment will require its own S3 bucket. For simplicity, you can give them the same name as the domain they will be hosting (e.g. “blog.joshwalsh.me” and “preview.blog.joshwalsh.me”).

The bucket setup looks a bit different for the two environments.

If you set up a site and don’t use the S3 static website endpoint, at first glance you might think it’s working anyway. You might even question my intelligence for recommending that you do something so clearly unnecessary.

But there’s a catch. Try disabling JavaScript and look at any page other than your homepage. You’ll get a 404 error. Try sharing the link on Facebook, and you’ll most likely see that the preview on Facebook shows as a 404 as well. Why don’t you see these when you visit the link? Well, visiting the link will (assuming you’ve set up your server correctly) load 404.html. This page contains some JavaScript (loader.js) that will use AJAX to load the correct page, masking the issue. This can make it difficult to notice that your pages aren’t initially loading correctly.

For production, you’ll want to set up the site to use S3’s Static Website Hosting feature. You can enable this in the Properties of your bucket. Set your index document to be “index.html” and your error document to be “404.html”. (Interestingly, the index document is directory-relative, while the error document is absolute. This is useful, but it’s an inconsistency that I can’t find documented anywhere.)

The reason that this is necessary is because of the index.html rewrite. Visitors will access a page like https://blog.joshwalsh.me/hilbert-curve/, and behind the scenes you need to load the object in S3 with the key “hilbert-curve/index.html”. You might think you can use CloudFront’s “Default Root Object” setting for this but, as the name suggests, that will only work in your site’s root, not in any subdirectories.

You’ll also have to enable public access to the bucket. Make sure your Public Access Settings allow you to make the bucket public via bucket policies. (This is a new feature and the documentation for setting up a public bucket hasn’t yet been updated to include it.) Then, configure a public bucket policy.

Now on to the preview bucket. You probably don’t want to set this one up as a static site, as that would allow anyone who can guess the address to access it. Later we’ll talk about password protecting your CloudFront distribution, but that’s going to be pointless if your bucket is wide open. Instead, set up your bucket normally and don’t grant anyone except you access to it. We’ll use a different technique to fix the index.html problem on this environment.

CloudFront

There’s not too much interesting about setting up the CloudFront distribution. Use the Alternate Domain Names (CNAMEs) section to put in both addresses for each environment, and then select Custom SSL Certificate and choose the ones we made in Certificate Manager earlier. You might as well set the Default Root Object to “index.html” as well. I don’t know if it’s necessary, but it certainly won’t do any harm.

Make sure that for your production environment you use the bucket’s static website hosting endpoint as the Origin Domain Name (e.g. blog.joshwalsh.me.s3-website-ap-southeast-2.amazonaws.com). For your preview environment, use the bucket’s address as the Origin Domain Name (e.g. preview.blog.joshwalsh.me.s3.amazonaws.com, it should appear in the dropdown menu). On the preview distribution, enable Restrict Bucket Access and let CloudFront do the work of creating an identity and updating the bucket policy for you.

On your production environment you might also want to customise the caching behaviour to add a Minimum TTL. Gatsby’s caching recommendations (which are implemented by gatsby-plugin-s3) say that HTML files should not be cached, in order to prevent browsers or proxy servers from keeping stale content. CloudFront by default will use these same caching rules, but because we’ll run an invalidation every time we publish changes we don’t need to worry about stale content on CloudFront. By customising the minimum TTL value, we can force CloudFront to cache all files, which should slightly reduce the TTFB (Time-To-First-Byte) time for HTML files. The improvement is minor, but why not squeeze out every bit of performance possible?
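To make those caching rules a bit more concrete, here is a rough sketch of how a Cache-Control value might be chosen for each file, based on my reading of Gatsby’s caching recommendations. The function name cacheControlFor is made up for illustration, and this is a simplification, not the actual logic inside gatsby-plugin-s3.

```javascript
'use strict';

// Assumed header values, following Gatsby's caching recommendations.
const REVALIDATE = 'public, max-age=0, must-revalidate';
const IMMUTABLE = 'public, max-age=31536000, immutable';

// Hypothetical helper: choose a Cache-Control header for an S3 object key.
function cacheControlFor(key) {
  // The service worker should always be revalidated.
  if (key === 'sw.js') return REVALIDATE;
  // Everything under static/ has a content hash in its path.
  if (key.startsWith('static/')) return IMMUTABLE;
  // HTML and page data keep the same name between builds.
  if (key.endsWith('.html') || key.endsWith('.json')) return REVALIDATE;
  // JS and CSS bundles are fingerprinted by the build.
  if (key.endsWith('.js') || key.endsWith('.css')) return IMMUTABLE;
  // When unsure, prefer revalidation over stale content.
  return REVALIDATE;
}

console.log(cacheControlFor('hilbert-curve/index.html'));
console.log(cacheControlFor('static/abc123/photo.png'));
```

With a Minimum TTL set on the distribution, CloudFront would keep the HTML files at the edge regardless of the first header, while browsers would still revalidate them.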

If you haven’t used S3 much before, you may be confused as to why it returns 403 instead of 404 when an object can’t be found. The reason for this is to prevent attackers from being able to determine the names of objects within a bucket they don’t have access to, a type of resource enumeration attack.

If AWS returned 404 for objects that didn’t exist, an attacker could try accessing objects with random names and check if they returned 404 or 403 to find out whether or not they exist.

After your distributions are created, go in and edit your preview distribution and go to the Error Pages tab. Create a custom error response for the code 403. Set the response page path to /404.html and the response code to 404.

You’ll need the IDs for the distributions later on. If you make a note of them now, you won’t have to waste time getting them later on.

Preview environment extras

There are two extra things that we need to do for the preview environment. The first is to password protect it, and the second is to apply an index.html rewrite rule, because we aren’t using S3 static website hosting.

We can achieve both using Lambda@Edge. You should be aware that Lambda@Edge is not included within the Free Tier of AWS. However, unless you have thousands of people accessing your preview site every month, these scripts are unlikely to cost more than 0.60USD per month.

For password protection, I found this article which includes a suitable script. I have slightly tweaked the script in order to allow for multiple username/password combinations.

    'use strict';

    exports.handler = (event, context, callback) => {
        // Get request and request headers
        const request = event.Records[0].cf.request;
        const headers = request.headers;

        // Allowed username/password combinations
        const users = {
            "admin": "password",
        };

        // Require Basic authentication
        if (typeof headers.authorization != 'undefined') {
            const authString = headers.authorization[0].value;
            const base64 = authString.substring(6);
            const combo = Buffer.from(base64, 'base64').toString('utf8').split(':');
            const username = combo[0];
            const password = combo[1];

            // Only accept usernames that exist in the list. Without this check,
            // an unknown username with no password would compare
            // undefined === undefined and be let through.
            const knownUser = Object.prototype.hasOwnProperty.call(users, username);
            if (knownUser && users[username] === password) {
                // Continue request processing if authentication passed
                callback(null, request);
                return; // Don't fall through to the 401 response below
            }
        }

        // Otherwise, reject the request
        const body = 'Unauthorized';
        const response = {
            status: '401',
            statusDescription: 'Unauthorized',
            body: body,
            headers: {
                'www-authenticate': [{key: 'WWW-Authenticate', value:'Basic'}]
            },
        };
        callback(null, response);
    };

Once you’ve created the Lambda function, you can attach it to your distribution by adding CloudFront as a trigger, then clicking the “Deploy to Lambda@Edge” button. You’ll want to use the “Viewer Request” event for this one, so that it runs every time someone accesses the site.
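One limitation of the script above is that it splits the decoded credentials on every colon, so a password that itself contains a colon can never match. As a minimal sketch (parseBasicAuth is a name I’ve made up, it isn’t part of the original script), the header could instead be split on the first colon only:

```javascript
'use strict';

// Hypothetical helper: decode an HTTP Basic "Authorization" header value.
// Returns { username, password }, or null if the header is malformed.
function parseBasicAuth(headerValue) {
  if (typeof headerValue !== 'string' || !headerValue.startsWith('Basic ')) {
    return null;
  }
  const decoded = Buffer.from(headerValue.substring(6), 'base64').toString('utf8');
  const separator = decoded.indexOf(':');
  if (separator === -1) {
    return null; // No colon means no password was supplied
  }
  return {
    username: decoded.substring(0, separator),
    password: decoded.substring(separator + 1), // May itself contain colons
  };
}

// Build a header the same way a browser would, then decode it again.
const header = 'Basic ' + Buffer.from('admin:pass:word').toString('base64');
console.log(parseBasicAuth(header));
```

Returning null for malformed headers means the caller can treat “no credentials” and “bad credentials” the same way and respond with 401 in both cases.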

As for the index.html thing, Amazon themselves have an excellent article about this topic. I just used the script from there. For this one you need to use the “Origin Request” event, which means it will only run when there’s a cache miss and the result of the rewrite will be cached.

buildspec.yml

Now we’re getting to the exciting part, CI/CD.

CodeBuild uses a file called buildspec.yml to work out how to build your application. Here’s my buildspec.yml:

    version: 0.2

    phases:
      install:
        runtime-versions:
          nodejs: 12
        commands:
          - 'touch .npmignore'
          - 'npm install -g gatsby'
      pre_build:
        commands:
          - 'npm ci --production'
      build:
        commands:
          - 'npm run-script build'
      post_build:
        commands:
          - 'npm run-script deploy'

    artifacts:
      base-directory: public
      files:
        - '**/*'
      discard-paths: no

    cache:
      paths:
        - '.cache/*'
        - 'public/*'

Since the initial publication of this post the CodeBuild images have been updated and it’s now possible to use yarn instead of npm. Yarn can help resolve issues with conflicting dependencies, particularly around sharp. See here for updated build commands that use Yarn.

If anyone figures out how to cache dependencies, please let me know. If I try to add node_modules to the cache then subsequent builds give me an error:

There was a problem loading the local build command. Gatsby may not be installed in your site’s “node_modules” directory. Perhaps you need to run “npm install”? You might need to delete your “package-lock.json” as well.

It’d also be nice if I could cache dependencies installed outside the node_modules directory, such as phantomjs, libvips, and sass.

I got the initial buildspec.yml from this article and slightly modified it. My changes allow for deploying to multiple environments using only a single buildspec.yml file, and slightly improve the speed of builds by storing dependencies and the Gatsby cache between builds.

Configuring gatsby-plugin-s3

We’ll be using gatsby-plugin-s3 to deploy our site to S3. If you haven’t already installed it in your Gatsby project, make sure to do so. gatsby-plugin-s3 needs some configuration before it will work correctly, especially when you’re using it with CloudFront. Open up gatsby-config.js and near the top of it, add the following:

    const targetAddress = new URL(process.env.TARGET_ADDRESS || `http://blog.joshwalsh.local`);

(This should go above the module.exports line, but beneath any require/import statements.)

Next, locate your gatsby-plugin-s3 config block (or add it, if it doesn’t already exist).

    {
        resolve: `gatsby-plugin-s3`,
        options: {
            bucketName: process.env.TARGET_BUCKET_NAME || "fake-bucket",
            region: process.env.AWS_REGION,
            protocol: targetAddress.protocol.slice(0, -1),
            hostname: targetAddress.hostname,
            acl: null,
            params: {
                // In case you want to add any custom content types: https://github.com/jariz/gatsby-plugin-s3/blob/master/recipes/custom-content-type.md
            },
        },
    }

This sets bucketName to use the TARGET_BUCKET_NAME environment variable if it’s available, otherwise we use “fake-bucket” as a default. We need to put a valid (but possibly non-existent) bucket name as a default because the environment variable will only be present in CodeBuild, and so if we tried to do a build locally gatsby-plugin-s3 would prevent it. If you’d like to be able to deploy to an S3 bucket from your local computer, you can replace “fake-bucket” with a real bucket name.

protocol & hostname are important fields to ensure that server-side redirects work correctly. If these are omitted, any redirects will take users away from your CloudFront distribution and instead send them straight to the S3 Static Website Hosting endpoint.

Setting acl explicitly to null will ensure that objects are uploaded without an ACL. ACLs are considered legacy by Amazon and should no longer be used. Plus, if we use ACLs then we have to grant extra IAM permissions.

Configuring gatsby-plugin-canonical-urls

Remember, it’s not possible to restrict access to an S3 Static Website. For this reason, even once your site is all set up with your CloudFront URL (https://blog.joshwalsh.me) it will still also be accessible from the Static Website Hosting Endpoint (http://blog.joshwalsh.me.s3-website-ap-southeast-2.amazonaws.com/).

How can you discourage search engines from linking to this incorrect domain in search results? How do you stop them from penalising you for duplicate content?

The answer is to provide a canonical URL for each page, and fortunately there’s a plugin which makes this very easy. Install gatsby-plugin-canonical-urls and head back into your gatsby-config.js file.

    {
        resolve: `gatsby-plugin-canonical-urls`,
        options: {
            siteUrl: targetAddress.href.slice(0, -1),
        },
    }

It’s as simple as that! This uses the same targetAddress variable that we set in the previous section, which is based on the TARGET_ADDRESS environment variable.
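If the slice(0, -1) calls in these two config blocks look cryptic, this small sketch shows what each expression evaluates to for an example address. The stripTrailingSlash helper at the end is my own suggestion, not something either plugin requires. It may be worth considering if your TARGET_ADDRESS could ever include a path, because slice(0, -1) removes the last character whether or not it is a slash.

```javascript
'use strict';

// Example address; in CodeBuild this would come from TARGET_ADDRESS.
const targetAddress = new URL('https://preview.blog.joshwalsh.me/');

// URL.protocol includes the trailing colon, which gatsby-plugin-s3 doesn't want.
const protocol = targetAddress.protocol.slice(0, -1); // 'https:' -> 'https'
const hostname = targetAddress.hostname;

// URL.href for a bare origin always ends in '/', so this drops that slash.
const siteUrl = targetAddress.href.slice(0, -1);

console.log(protocol, hostname, siteUrl);

// Hypothetical alternative: only strip a trailing slash when one is present.
function stripTrailingSlash(href) {
  return href.replace(/\/$/, '');
}

console.log(stripTrailingSlash(new URL('https://example.com/blog').href));
```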

NPM scripts

You might have noticed that the build and post_build commands in the buildspec.yml file just reference npm scripts. You will need to add these to the scripts section of package.json.

Your starter probably already came with a build script, but in case it didn’t, here you go:

    "build": "gatsby build"

As for the deploy script, that’s a bit more involved:

    "deploy": "gatsby-plugin-s3 deploy --yes && aws cloudfront create-invalidation --distribution-id $CLOUDFRONT_DISTRIBUTION --paths \"/*\""

There are two components to this script. The first command uses gatsby-plugin-s3 to deploy our changes to the S3 bucket:

    gatsby-plugin-s3 deploy --yes

The second command invalidates the CloudFront distribution to ensure that CloudFront doesn’t keep serving up its cached copy of the previous version of your site:

    aws cloudfront create-invalidation --distribution-id $CLOUDFRONT_DISTRIBUTION --paths "/*"

If for some reason you want to wait until the CloudFront invalidation is finished before you count your build as finished, you can do that, but it will require some extra work. First you need to extract the invalidation ID from the CloudFront response:

    aws cloudfront create-invalidation --distribution-id $CLOUDFRONT_DISTRIBUTION \
    --paths "/*" | tr '\n' ' ' | sed -r 's/.*"Id": "([^"]*)".*$/\1/'

And then you can use command substitution to wait for that invalidation to complete:

    aws cloudfront wait invalidation-completed --distribution-id $CLOUDFRONT_DISTRIBUTION \
    --id $(aws cloudfront create-invalidation --distribution-id $CLOUDFRONT_DISTRIBUTION --paths "/*" | tr '\n' ' ' | sed -r 's/.*"Id": "([^"]*)".*$/\1/')

This will increase the cost of your builds and increase your likelihood of leaving Free Tier, so I really don’t recommend this. To discourage you from doing it, I’m not going to provide a JSON version of this script, so if you really want to do it you’ll have to escape the string yourself.
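If you’d rather not rely on sed to pick the ID out of the response, the same extraction can be sketched in Node by parsing the JSON properly. The function name extractInvalidationId and the sample response are made up for illustration, and the sample is trimmed to the fields that matter here.

```javascript
'use strict';

// Hypothetical helper: read the invalidation ID out of the JSON that
// `aws cloudfront create-invalidation` prints to stdout.
function extractInvalidationId(cliOutput) {
  const response = JSON.parse(cliOutput);
  if (!response.Invalidation || !response.Invalidation.Id) {
    throw new Error('No invalidation ID found in CLI output');
  }
  return response.Invalidation.Id;
}

// Made-up sample output, not a real invalidation.
const sample = JSON.stringify({
  Location: 'https://example.invalid/placeholder',
  Invalidation: { Id: 'IEXAMPLE123', Status: 'InProgress' },
});

console.log(extractInvalidationId(sample));
```

The AWS CLI’s global --query and --output options can do the same job without any extra tooling, e.g. by adding --query 'Invalidation.Id' --output text to the create-invalidation command.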

CodePipeline

Okay, time to put it all together. Create a new CodePipeline pipeline. As part of the wizard, you’ll have to create a build stage, which we’ll use for the preview environment. Use CodeBuild as the provider and create a new project. The project name should reflect that this is the build project for your preview stage. Choose Managed image > Amazon Linux 2 > Standard > aws/codebuild/amazonlinux2-x86_64-standard:2.0 > Always use the latest… Also make sure Environment Type is set to Linux (not Linux GPU) and leave Privileged unchecked.

Expand the Additional configuration section, and add some environment variables:

- TARGET_ADDRESS should contain the address that you want to host your site at (e.g. https://preview.blog.joshwalsh.me/)
- TARGET_BUCKET_NAME should contain the name of your preview bucket
- CLOUDFRONT_DISTRIBUTION should contain the ID of your preview CloudFront distribution

Create the project and finish creating the pipeline.

After the “Build” stage, add an “Approval” stage with a manual approval step. Putting the URL to your preview site in the “URL for review” field might save you a couple of seconds each time you review a change, so I’d recommend doing it.

At this point if you’d like you can delete the “Build” stage and replace it with a “Preview” stage. This is a totally unnecessary step, but I did it because I didn’t like the default “Build” name for the stage, it was annoying me. Unfortunately it seems you can’t edit the name of a stage, so deleting it and remaking it seems to be the only option if you want it changed.

Now, create another stage after the “Approval” stage. I called mine “Go-live”. Create a new CodeBuild action in this stage, and set it up similarly to how you set up the Preview project. But obviously this time you’ll want to change the environment variables to point to your production bucket & CloudFront distribution.

We do a Gatsby build for the preview environment and then we rebuild everything for the production environment. You might be wondering “Why? Wouldn’t it be more efficient for the production build to just copy the files from the preview bucket to the production bucket?”

That certainly would be more efficient, but doing a separate Gatsby build for each environment gives you some additional flexibility. You can add additional environment-specific details (such as API keys) as environment variables in your CodeBuild projects, and reference them in your project’s JavaScript using process.env.YOUR_ENVIRONMENT_VARIABLE.
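As a rough sketch of that idea, the per-environment settings could be collected in one place and checked up front, so a misconfigured CodeBuild project fails early with a clear message. The loadDeployConfig name and the example values are my own inventions. Note that this fails fast, which suits CI, whereas the gatsby-config.js shown earlier deliberately falls back to defaults so that local builds still work.

```javascript
'use strict';

// Hypothetical helper: gather the environment-specific settings and
// complain loudly if any of them is missing.
function loadDeployConfig(env) {
  const required = ['TARGET_ADDRESS', 'TARGET_BUCKET_NAME', 'CLOUDFRONT_DISTRIBUTION'];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error('Missing environment variables: ' + missing.join(', '));
  }
  return {
    targetAddress: new URL(env.TARGET_ADDRESS),
    bucketName: env.TARGET_BUCKET_NAME,
    distributionId: env.CLOUDFRONT_DISTRIBUTION,
  };
}

// Placeholder values; in CodeBuild you would pass process.env instead.
const config = loadDeployConfig({
  TARGET_ADDRESS: 'https://preview.blog.joshwalsh.me/',
  TARGET_BUCKET_NAME: 'preview.blog.joshwalsh.me',
  CLOUDFRONT_DISTRIBUTION: 'EXAMPLEDISTID',
});

console.log(config.bucketName, config.targetAddress.hostname);
```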

Additional CodeBuild settings

You’ll now need to edit the CodeBuild projects you just created to set some additional settings. Head on over to CodeBuild and edit the Artifacts for each project. In the “Additional configuration” section, set the Cache Type to S3 and fill out the rest of the details. Make sure that your environments don’t overlap by either giving each environment its own bucket, or giving each its own prefix.

IAM permissions

Because the build scripts perform operations against both S3 and CloudFront, we need to give our CodeBuild service roles access to these. Create an inline policy for each role:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "0",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:ListBucket",
                    "s3:HeadBucket",
                    "s3:DeleteObject",
                    "s3:GetBucketLocation",
                    "s3:PutBucketWebsite"
                ],
                "Resource": [
                    "arn:aws:s3:::WEBSITEBUCKETNAME",
                    "arn:aws:s3:::WEBSITEBUCKETNAME/*",
                    "arn:aws:s3:::CACHEBUCKETNAME",
                    "arn:aws:s3:::CACHEBUCKETNAME/*"
                ]
            },
            {
                "Sid": "1",
                "Effect": "Allow",
                "Action": [
                    "cloudfront:GetInvalidation",
                    "cloudfront:CreateInvalidation"
                ],
                "Resource": "*"
            }
        ]
    }

Replace WEBSITEBUCKETNAME with the name of the bucket each site is hosted from, use the preview bucket for the preview CodeBuild role and the production bucket for the production CodeBuild role. The s3:HeadBucket permission probably isn’t necessary, but I’m leaving it in just in case.

Replace CACHEBUCKETNAME with the name of the bucket you used in Additional CodeBuild settings.
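Since the two roles need the same policy with different bucket names, you may prefer to generate the JSON rather than edit it by hand. This is only a sketch: buildPolicy is a made-up helper, the bucket names in the example call are placeholders, and the action list is copied from the policy above.

```javascript
'use strict';

// Hypothetical helper: build the inline policy for one environment.
function buildPolicy(websiteBucket, cacheBucket) {
  const resources = [];
  for (const bucket of [websiteBucket, cacheBucket]) {
    // Each bucket needs both the bucket ARN and the object-level ARN.
    resources.push(`arn:aws:s3:::${bucket}`, `arn:aws:s3:::${bucket}/*`);
  }
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Sid: '0',
        Effect: 'Allow',
        Action: [
          's3:PutObject', 's3:GetObject', 's3:ListBucket', 's3:HeadBucket',
          's3:DeleteObject', 's3:GetBucketLocation', 's3:PutBucketWebsite',
        ],
        Resource: resources,
      },
      {
        Sid: '1',
        Effect: 'Allow',
        Action: ['cloudfront:GetInvalidation', 'cloudfront:CreateInvalidation'],
        Resource: '*',
      },
    ],
  };
}

// Placeholder bucket names for the preview environment.
const policy = buildPolicy('preview.blog.joshwalsh.me', 'example-cache-bucket');
console.log(JSON.stringify(policy, null, 4));
```

Run it once per environment and paste the output into the inline policy editor for the matching CodeBuild role.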

Finished

And with that, we’re finally done. Push your project to CodeCommit (or whatever VCS provider you’re using) and it should kick off a build. Nothing ever works first try, but after you’ve ironed out whatever bugs you encounter you will be the proud owner of a new website that’s efficient, fast and cheap.